How Apple.com will serve retina images to new iPads

One of the more interesting questions raised by the new iPad and its retina display is whether or not web sites should deliver higher resolution images when there is no way to know the connection speed. AppleInsider found that Apple.com is being prepped to deliver high-res images and documented how you can test it in Safari on your desktop.

As you can imagine given my research on responsive images, I was keenly interested to see what approach Apple took.

What they’ve chosen to do is load the regular images for the site and then, if the device requesting the page is a new iPad with the retina display, use JavaScript to replace each image with a high-res version of it.

The heavy lifting for the image replacement is being done by image_replacer.js. Jim Newberry prettified the code and placed it in a gist for easier reading.

The code works similarly to responsive images in that data attributes are added to the markup to indicate what images should be replaced with high-res versions:

<article id="billboard" class="selfclear" data-hires="true">

<a id="hero" class="block" href="/ipad/">

<hgroup>

<h1>

<img src="http://images.apple.com/home/images/ipad_title.png" alt="Resolutionary" width="471" height="93" class="center" />

</h1>

<h2>

<img src="http://images.apple.com/home/images/ipad_subtitle.png" alt="The new iPad." width="471" height="54" class="center" />

</h2>

</hgroup>

<img src="http://images.apple.com/home/images/ipad_hero.jpg" alt="" width="1454" height="605" class="hero-image" />

</a>

</article>

Unlike most of the solutions I reviewed last summer, Apple is applying the data-hires attribute to the parent container instead of to the images themselves. Also, the images borrow from native iOS development and follow a consistent naming scheme, so the high-res version of ‘ipad_title.png’ can be found at ‘ipad_title_2x.png’.
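To make the naming convention concrete, here is a minimal sketch of how a standard image URL could be mapped to its retina counterpart. The function name `toHiResSrc` is hypothetical and this is not Apple's implementation; it only assumes that the `_2x` suffix goes immediately before the file extension, as in the example above.

```javascript
// Hypothetical helper (not taken from image_replacer.js): insert "_2x"
// before the file extension, e.g. ipad_title.png -> ipad_title_2x.png.
// Assumes the URL ends with the extension (no query string or fragment).
function toHiResSrc(src) {
  return src.replace(/(\.[a-z0-9]+)$/i, "_2x$1");
}

console.log(toHiResSrc("http://images.apple.com/home/images/ipad_title.png"));
// prints "http://images.apple.com/home/images/ipad_title_2x.png"
```

A convention like this means the markup never has to list the retina URLs; the script can derive them from the standard ones.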

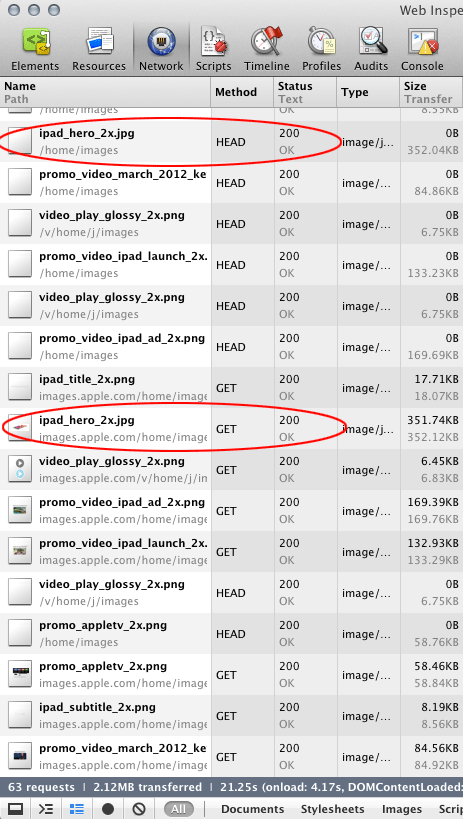

As far as I can tell, there is no attempt to prevent duplicate downloads of images. New iPad users are going to download both the full desktop-size image and its retina version.

The price for both images is fairly steep. For example, the iPad hero image on the home page is 110.71K at standard resolution. The retina version is 351.74K. The new iPad will download both for a payload of 462.45K for the hero image alone.

The total size of the page goes from 502.90K to 2.13MB when the retina versions of images are downloaded.
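The arithmetic behind those numbers can be checked with a few lines. The sizes come from the measurements above; the conversion of 1MB to 1024K is an assumption, and using 1000K instead would give a page ratio closer to 4.2.

```javascript
// Sizes in K, taken from the measurements quoted in the text.
const heroStandardK = 110.71;
const heroRetinaK = 351.74;

// A new iPad downloads both versions of the hero image.
const heroTotalK = heroStandardK + heroRetinaK;
console.log(heroTotalK.toFixed(2)); // prints "462.45"

// Whole-page comparison. Assumption: 1MB = 1024K.
const pageStandardK = 502.90;
const pageRetinaK = 2.13 * 1024;
const pageRatio = pageRetinaK / pageStandardK;
console.log(pageRatio.toFixed(1)); // prints "4.3"
```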

Another interesting part of image_replacer.js is that it checks for the existence of 2x images before downloading them:

requestHeaders: function (c) {

var a = this;

if (typeof a._headers === "undefined") {

var b = new XMLHttpRequest();

var a = this;

src = this.hiResSrc().replace(/^http:\/\/.*\.apple\.com\//, "/");

b.open("HEAD", src, true);

b.onreadystatechange = function () {

if (b.readyState == 4) {

if (b.status === 200) {

a._exists = true;

var f = b.getAllResponseHeaders();

a._headers = {

src: src

};

var d, e;

f = f.split("\r");

for (d = 0;

d < f.length; d++) {

e = f[d].split(": ");

if (e.length > 1) {

a._headers[e[0].replace("\n", "")] = e[1]

}

}

} else {

a._exists = false;

a._headers = null

}

if (typeof c === "function") {

c(a._headers, a)

}

}

};

b.send(null)

} else {

c(a._headers, a)

}

},

This is probably necessary as they move to providing two sets of assets, in case someone forgets to provide the retina image. It prevents broken images. Unfortunately, it means that there are now three HTTP requests for each asset: a GET request for the standard image, a HEAD request to verify the existence of the retina image, and a GET request to retrieve the retina image.
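The header-parsing loop inside requestHeaders can be isolated as a small pure function, which makes it easier to see what it does with the string returned by `getAllResponseHeaders()`. This is a sketch rather than Apple's code: the name `parseHeaders` is hypothetical, it splits each line at the first `": "` only, and it lowercases header names, neither of which the original does.

```javascript
// Hypothetical helper: turn a raw header string (lines separated by CRLF,
// as returned by XMLHttpRequest's getAllResponseHeaders) into an object.
function parseHeaders(raw) {
  const headers = {};
  for (const line of raw.split(/\r?\n/)) {
    const idx = line.indexOf(": ");
    if (idx === -1) continue; // skip blank or malformed lines
    // Lowercasing the name is a choice made for this sketch.
    headers[line.slice(0, idx).toLowerCase()] = line.slice(idx + 2);
  }
  return headers;
}

const parsed = parseHeaders("Content-Type: image/png\r\nContent-Length: 1234\r\n");
console.log(parsed["content-type"]);   // prints "image/png"
console.log(parsed["content-length"]); // prints "1234"
```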

Another interesting bit of image_replacer.js is when they decide to go retrieve the double-size image:

if ((this.options.debug !== true) && ((typeof AC.Detector !== "undefined" && AC.Detector.isMobile()) || (AC.ImageReplacer.devicePixelRatio() <= 1))) {

Of particular interest is the test for AC.Detector.isMobile(). This is defined in a separate script called browserdetect.js (prettified gist version).

The browserdetect.js script is full of user agent string parsing, looking for things like operating systems and even versions of OS X. The isMobile() function does the following:

isMobile: function (c) {

var d = c || this.getAgent();

return this.isWebKit(d) && (d.match(/Mobile/i) && !this.isiPad(d))

},

Basically, is this a WebKit browser, does the user agent mention mobile, and let’s make sure it isn’t an iPad. Browsers not using WebKit need not apply.
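The two checks can be combined into a standalone sketch to see which devices end up with retina images. Several things here are assumptions: the bodies of `isWebKit` and `isiPad` are simple stand-ins (the real ones live in browserdetect.js and are not shown above), the user agent strings are illustrative, and the reading that the condition guards an early exit follows from its logic rather than from the surrounding code. `shouldSkipReplacement` is a hypothetical name.

```javascript
// Stand-in detectors; the real implementations are not shown in this post.
function isWebKit(ua) { return /AppleWebKit/i.test(ua); }
function isiPad(ua) { return /iPad/i.test(ua); }

// Same logic as the isMobile() quoted above.
function isMobile(ua) {
  return isWebKit(ua) && /Mobile/i.test(ua) && !isiPad(ua);
}

// Mirrors the quoted condition: unless debugging, skip replacement for
// phones and for displays whose pixel ratio is 1 or less.
function shouldSkipReplacement({ debug, userAgent, devicePixelRatio }) {
  return debug !== true && (isMobile(userAgent) || devicePixelRatio <= 1);
}

// Illustrative user agent strings.
const iPadUA = "Mozilla/5.0 (iPad; CPU OS 5_1 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Version/5.1 Mobile/9B176 Safari/7534.48.3";
const iPhoneUA = "Mozilla/5.0 (iPhone; CPU iPhone OS 5_1 like Mac OS X) AppleWebKit/534.46 (KHTML, like Gecko) Version/5.1 Mobile/9B179 Safari/7534.48.3";

console.log(shouldSkipReplacement({ debug: false, userAgent: iPadUA, devicePixelRatio: 2 }));   // prints false
console.log(shouldSkipReplacement({ debug: false, userAgent: iPhoneUA, devicePixelRatio: 2 })); // prints true
```

Under these assumptions a retina iPhone is skipped even though its pixel ratio is 2, while the new iPad gets the replacement.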

Testing for yourself

AppleInsider’s instructions on how to test the retina version of Apple.com on your computer are very easy. Open Apple.com in Safari. Go to the console in the Web Inspector and type the following:

AC.ImageReplacer._devicePixelRatio = 2

new AC.ImageReplacer()

You’ll get back a klass object as shown below.

As an aside, notice SVG references in the console screenshot.

What can we learn from this?

Probably not a whole lot for a typical site because our goals will be different than Apple’s.

For Apple, it probably makes more sense to show off how wonderful the screen is regardless of the extra time and bandwidth required to deliver the high-resolution version. For everyone else, the balance between performance and resolution will be more pressing.

There are a few minor things that we can take away though:

- Planning ahead and knowing that you can depend on high-res images being available would be preferable to making extra HEAD requests to check to see if the images exist.

- Setting priority on which images to replace first is a good idea. This is something to look at and borrow from image_replacer.js.

- The retina version of Apple.com’s home page is more than four times the size of the standard home page (2.13MB versus 502.90K). Delivering retina images should be considered carefully.
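As a sketch of the first point, a site that knows ahead of time which images have retina versions could pick the right URL without any HEAD request. Everything here is hypothetical: the manifest, the `pickSrc` name, and the assumption that the list of available high-res assets is produced at build time.

```javascript
// Hypothetical manifest of images known to have a "_2x" version.
const hiResManifest = new Set([
  "/home/images/ipad_hero.jpg",
  "/home/images/ipad_title.png",
]);

// Choose the URL up front: no HEAD request is needed because the manifest
// already says whether the retina asset exists.
function pickSrc(src, devicePixelRatio, manifest) {
  if (devicePixelRatio > 1 && manifest.has(src)) {
    return src.replace(/(\.[a-z0-9]+)$/i, "_2x$1");
  }
  return src;
}

console.log(pickSrc("/home/images/ipad_hero.jpg", 2, hiResManifest));
// prints "/home/images/ipad_hero_2x.jpg"
console.log(pickSrc("/home/images/ipad_hero.jpg", 1, hiResManifest));
// prints "/home/images/ipad_hero.jpg"
```

This removes the existence check but still relies on script running before the standard image is requested if the duplicate download is to be avoided.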

Perhaps most importantly, Apple isn’t sitting on some secret technique to make retina images work well. Maybe they will provide better solutions in iOS 6. The way they handle images—downloading both sizes plus an additional HEAD request—may be the least efficient way to support retina displays. But for Apple, it likely doesn’t matter as much as it will for your site.

Hat tip to Jen Matson for pointing me to the original AppleInsider article.

Jason Grigsby is one of the co-founders of Cloud Four, Mobile Portland and Responsive Field Day. He is the author of Progressive Web Apps from A Book Apart. Follow him at @grigs.